Understanding Multithreading in C++

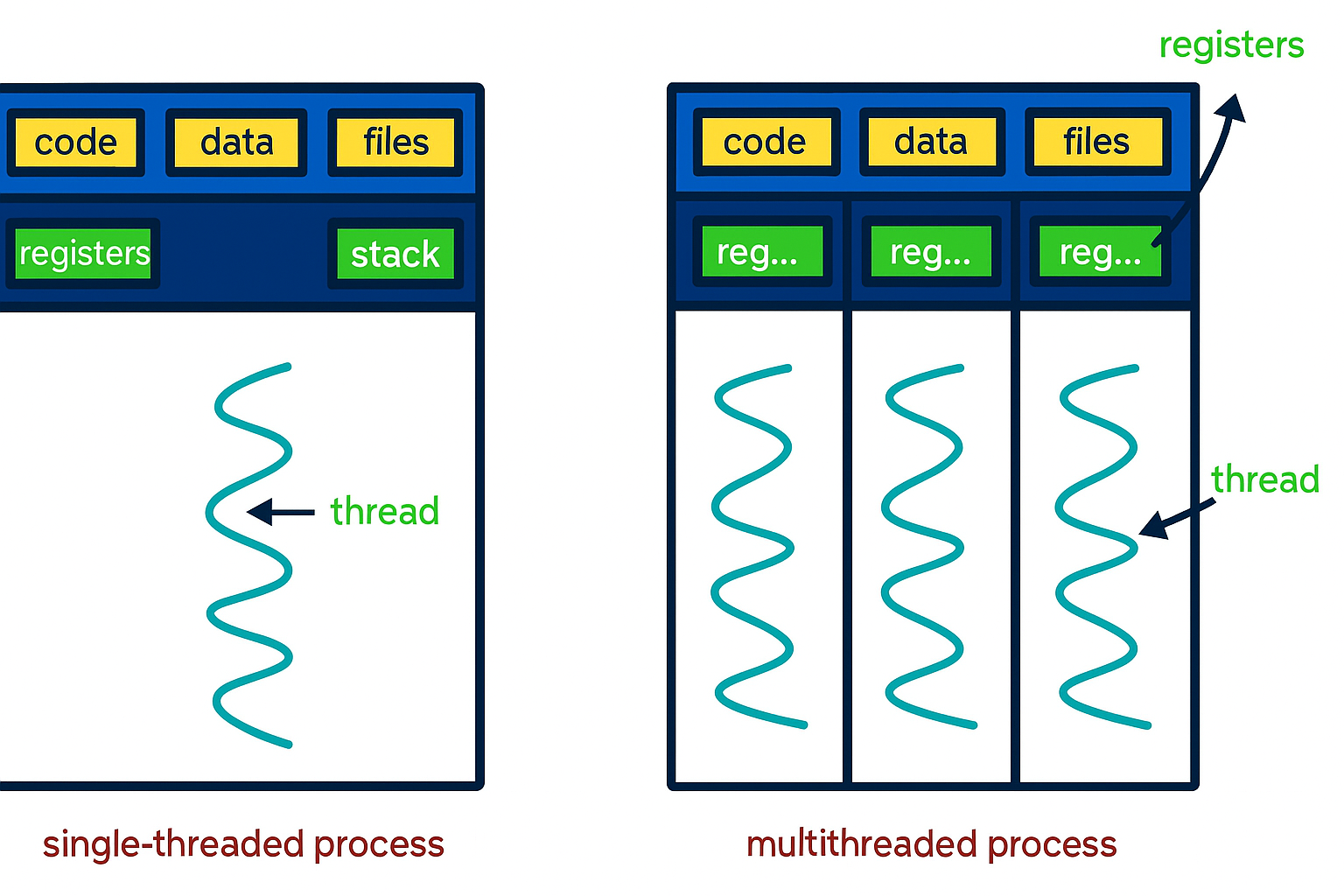

Multithreading is a programming approach where a single program is split into multiple smaller parts called threads. Each thread executes independently but can access shared resources like memory. This allows the program to perform multiple tasks at the same time, which can lead to better performance by making use of multiple CPU cores.

In C++, support for multithreading was added in the C++11 standard through the <thread> header, which provides the std::thread class.

How to Create a Thread in C++

In C++, the std::thread class is used to create and manage threads. When you create an object of this class, a new thread starts running the function or callable you provide.

The basic syntax looks like this:

std::thread threadName(callable);

threadName is the name you give to your thread object. callable refers to any callable entity, such as a function pointer, a lambda, or a functor, that defines what the thread will execute.

Example of Creating and Running a Thread in C++

#include <iostream>

#include <thread>

using namespace std;

// This function will run in a separate thread

void func() {

cout << "Hello from the thread!" << endl;

}

int main() {

// Create a thread that runs the function 'func'

thread t(func);

// Wait for the thread 't' to finish before continuing

t.join();

cout << "Main thread finished." << endl;

return 0;

}

What’s happening here?

- We define a function func that prints a message.

- We create a thread t that runs this function independently.

- The t.join() line makes sure the main program waits for the thread to complete before continuing.

- Finally, the main thread prints its own message.

Output:

Hello from the thread!

Main thread finished.

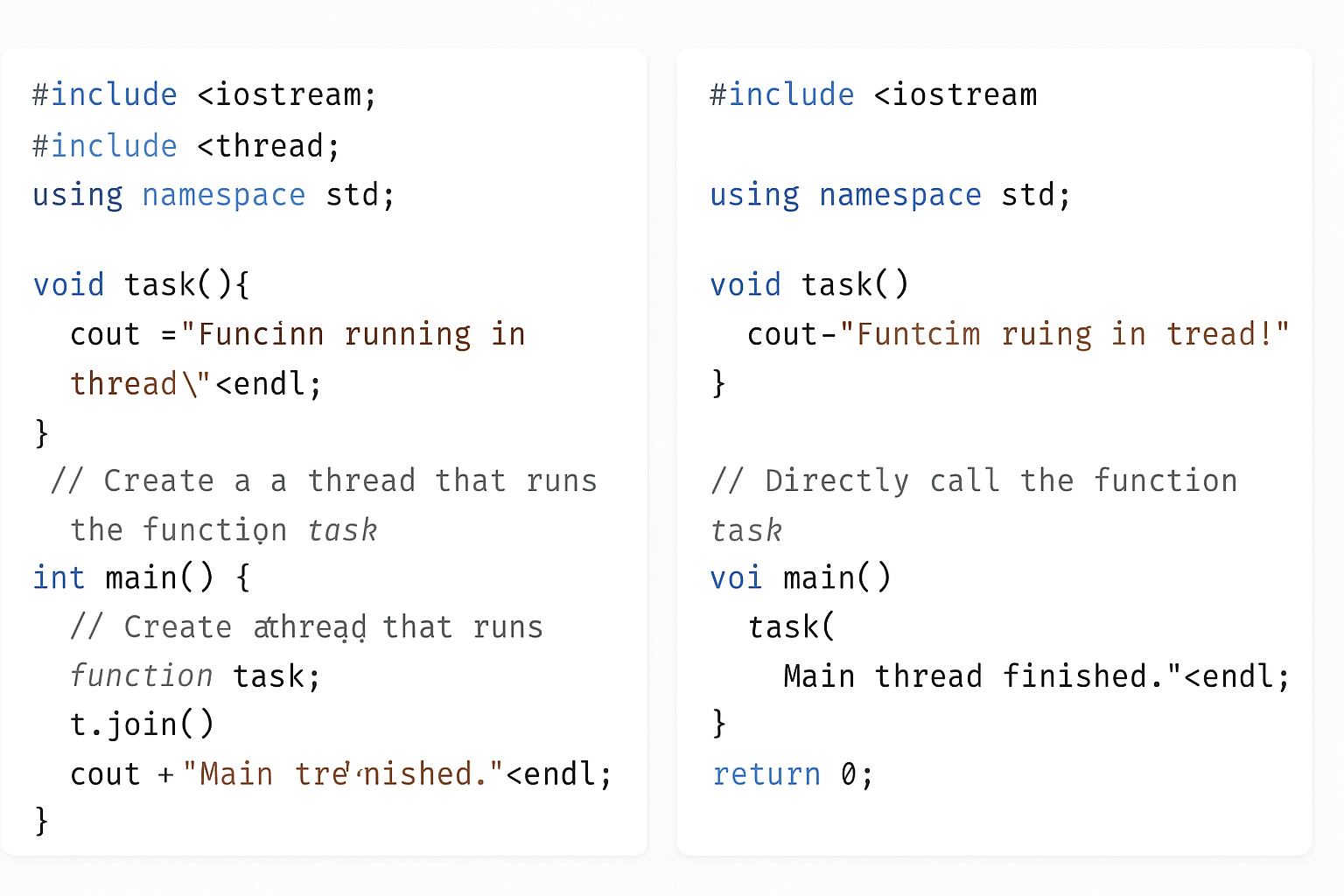

Running Code With and Without Threads

The two programs below run the same task, first directly in main() and then inside a separate thread, so you can compare sequential and concurrent execution. In the threaded version the main thread keeps going while the task runs, which is how threads let a program work on several things at the same time.

Code without threading:

#include <iostream>

#include <thread>

#include <chrono>

void task() {

for (int i = 1; i <= 5; ++i) {

std::cout << "Task running: " << i << std::endl;

std::this_thread::sleep_for(std::chrono::milliseconds(500)); // Simulate work

}

}

int main() {

std::cout << "Starting task without thread..." << std::endl;

task(); // Running task function directly (blocking)

std::cout << "Task completed without thread." << std::endl;

return 0;

}

Code with threading:

#include <iostream>

#include <thread>

#include <chrono>

void task() {

for (int i = 1; i <= 5; ++i) {

std::cout << "Task running: " << i << std::endl;

std::this_thread::sleep_for(std::chrono::milliseconds(500)); // Simulate work

}

}

int main() {

std::cout << "Starting task with thread..." << std::endl;

std::thread t(task); // Run task in a separate thread

// Main thread continues here immediately

std::cout << "Main thread continues while task runs..." << std::endl;

t.join(); // Wait for the thread to finish before exiting

std::cout << "Task completed with thread." << std::endl;

return 0;

}

What happens:

- Without thread: The program waits until task() completes before moving on.

- With thread: The program starts task() in a new thread and the main thread continues executing immediately. The join() call waits for the thread to finish before the program ends.

What is a Callable in C++ Threads?

When you create a thread in C++, you pass a callable to it. A callable is anything that can be called like a function, and the thread will execute this callable in parallel.

For example:

thread t(func); // Runs the function 'func' in a new thread

You can also pass arguments to the callable when creating the thread:

void printNumber(int num) {

cout << "Number: " << num << endl;

}

thread t(printNumber, 10); // Runs printNumber(10) in the thread
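One detail is easy to miss when passing arguments: std::thread copies (or moves) each argument into the new thread, so a function that takes a reference parameter needs the argument wrapped in std::ref. The sketch below illustrates this; the names addTen and runWithReference are made up for the example.

```cpp
#include <functional>  // std::ref
#include <thread>

// Illustrative helper: adds 10 to the integer it receives by reference.
void addTen(int& value) {
    value += 10;
}

// Illustrative wrapper: runs addTen in a thread and returns the updated value.
int runWithReference(int start) {
    int value = start;
    // std::ref wraps 'value' so the thread works on the original, not a copy.
    std::thread t(addTen, std::ref(value));
    t.join();  // After join(), the write made by the thread is visible here.
    return value;
}
```

Calling runWithReference(5) should give 15. Without std::ref the call does not compile, because the thread's internal copy cannot bind to a non-const reference parameter.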

Types of Callables You Can Use with Threads in Multithreading

In C++, callables fall into four main categories:

- Function: A regular function like func or printNumber.

- Lambda Expression: An anonymous function defined inline.

- Function Object: An object with operator() defined, so it behaves like a function.

- Member Function: A function that is part of a class, either static or non-static.

Callables in C++ Threads

When you create a thread in C++, you give it something called a callable — this is basically “something you can call like a function.” The thread runs that callable independently.

There are four common types of callables you can use in threads:

1. Function

What is it?

A function is like a small reusable machine inside your program. It is a named block of code that performs a specific task. You write the function once, and then you can call (or use) it anytime by its name instead of rewriting the same code again and again.

Functions help keep your code clean and organized, especially when working with more complex concepts like multithreading. In multithreading programming, you use functions to let multiple parts of your program run at the same time independently. This makes your programs faster and more efficient by doing many tasks simultaneously.

Example

#include <iostream>

#include <thread>

using namespace std;

void sayHello() {

cout << "Hello from function!" << endl;

}

int main() {

thread t(sayHello); // Create thread running sayHello()

t.join(); // Wait for thread to finish

cout << "Main thread done." << endl;

return 0;

}

What happens? The thread runs sayHello and prints a message separately while the main thread waits for it to finish.

2. Lambda Expression

What is it?


A lambda expression is an anonymous, inline function that you can write directly where you’d normally pass a function object, pointer, or functor.

Syntax (C++):

[ capture_list ] ( parameter_list ) -> return_type {

// body

};

- capture_list → which external variables you want to use inside the lambda.

- parameter_list → arguments (like in any function).

- return_type → optional; deduced if omitted.

- body → statements executed when called.

Why Lambdas in Multithreading?

When creating threads (e.g. using std::thread in C++), you often want to pass a function for the thread to run. Instead of writing a separate function or a functor class, lambdas let you:

- write the thread’s code inline

- capture variables from your current scope

- reduce boilerplate code

Example

Without Lambda:

#include <iostream>

#include <thread>

void printHello() {

std::cout << "Hello from thread!" << std::endl;

}

int main() {

std::thread t(printHello);

t.join();

}

With Lambda:

#include <iostream>

#include <thread>

int main() {

std::thread t([] {

std::cout << "Hello from thread!" << std::endl;

});

t.join();

}

Passing Variables via Capture:

#include <iostream>

#include <thread>

int main() {

int value = 42;

std::thread t([value] {

std::cout << "Value is: " << value << std::endl;

});

t.join();

}

If you want to modify a captured variable, capture it by reference:

#include <iostream>

#include <thread>

int main() {

int value = 0;

std::thread t([&value] {

value = 100;

});

t.join();

std::cout << "Value is now: " << value << std::endl;

}

Example

#include <iostream>

#include <thread>

using namespace std;

int main() {

thread t([]() {

cout << "Hello from lambda!" << endl;

}); // Lambda runs inside thread

t.join();

cout << "Main thread done." << endl;

return 0;

}

What happens? The lambda function runs in the new thread, printing the message.

3. Function Object (Functor)

What is it?

A function object (functor) is an object that can be called like a function. This happens when a class defines a special function called operator(). Because of this, you can use an instance of that class just like you would call a normal function.

Function objects are very helpful in multithreading programming. They let you package both code and data inside an object that can be passed to threads easily. This makes your multithreading programs more flexible, organized, and easier to manage.

Example

#include <iostream>

#include <thread>

using namespace std;

// Define a class with operator()

class Functor {

public:

void operator()() {

cout << "Hello from function object!" << endl;

}

};

int main() {

Functor f;

thread t(f); // Run the functor in a thread

t.join();

cout << "Main thread done." << endl;

return 0;

}

What happens? The thread calls operator() of the functor, printing the message.
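To illustrate the point about packaging code and data together, here is a minimal sketch of a functor that carries its own input and stores its own result. The names RangeSum and sumInThread are invented for this example. Because std::thread copies the callable it is given, the sketch passes std::ref(job) so the thread works on the original object.

```cpp
#include <functional>  // std::ref
#include <thread>

// Illustrative functor: carries its input range and stores its result.
struct RangeSum {
    int first;
    int last;
    long long result;

    RangeSum(int f, int l) : first(f), last(l), result(0) {}

    void operator()() {
        for (int i = first; i <= last; ++i) {
            result += i;
        }
    }
};

// Illustrative wrapper: sums first..last on a separate thread.
long long sumInThread(int first, int last) {
    RangeSum job(first, last);
    // std::thread copies its callable; std::ref makes it use 'job' itself,
    // so the result written by the thread can be read after join().
    std::thread t(std::ref(job));
    t.join();
    return job.result;
}
```

With thread t(job) instead, the thread would update a private copy and job.result would stay at 0.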

4. Member Function (Static or Non-Static)

What is it?

A member function is a function that belongs to a class. There are two types:

- Static member function: This kind of function does not need an object to be called. You can call it directly using the class name.

- Non-static member function: This function needs an object of the class to work on because it usually uses the object’s data.

In multithreaded programs, threads can run both static and non-static member functions. To run a non-static member function in a thread, you must also give the thread the object it should work on.

Example — Static Member Function

#include <iostream>

#include <thread>

using namespace std;

class MyClass {

public:

static void staticFunc() {

cout << "Hello from static member function!" << endl;

}

};

int main() {

thread t(MyClass::staticFunc); // Run static function in thread

t.join();

cout << "Main thread done." << endl;

return 0;

}

Example — Non-Static Member Function

#include <iostream>

#include <thread>

using namespace std;

class MyClass {

public:

void nonStaticFunc() {

cout << "Hello from non-static member function!" << endl;

}

};

int main() {

MyClass obj;

thread t(&MyClass::nonStaticFunc, &obj); // Pass function pointer and object

t.join();

cout << "Main thread done." << endl;

return 0;

}

Note: For non-static member functions, you pass a pointer to the member function first and a pointer to the object right after it. The object must stay alive until the thread has finished using it.
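If the member function takes parameters, they follow the object pointer in the thread constructor. The following sketch shows the argument order; the Counter class and addInThread function are hypothetical names used only for illustration.

```cpp
#include <thread>

// Illustrative class: a counter whose member function takes an argument.
class Counter {
public:
    void add(int amount) { total += amount; }
    int value() const { return total; }

private:
    int total = 0;
};

// Illustrative wrapper: calls counter.add(amount) on a separate thread.
int addInThread(int amount) {
    Counter counter;
    // Order: member function pointer, object pointer, then the arguments.
    std::thread t(&Counter::add, &counter, amount);
    t.join();  // 'counter' outlives the thread because we join before returning.
    return counter.value();
}
```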

Summary for Multithreading

| Callable Type | How to Use in Thread | Example |

|---|---|---|

| Function | thread t(func); | void func() |

| Lambda Expression | thread t([](){ /* code */ }); | Inline anonymous function |

| Function Object | thread t(functorObj); | Class with operator() |

| Static Member Func | thread t(ClassName::func); | Static function of class |

| Non-Static Member Func | thread t(&Class::func, &obj); | Non-static func + object |

Let’s create a simple C++ program that demonstrates all four callable types running in separate threads. This will help you see how each callable works side-by-side.

#include <iostream>

#include <thread>

using namespace std;

// 1. Regular Function

void regularFunction() {

cout << "Hello from regular function!" << endl;

}

// 3. Function Object (Functor)

class Functor {

public:

void operator()() {

cout << "Hello from function object!" << endl;

}

};

// 4. Class with static and non-static member functions

class MyClass {

public:

static void staticMemberFunction() {

cout << "Hello from static member function!" << endl;

}

void nonStaticMemberFunction() {

cout << "Hello from non-static member function!" << endl;

}

};

int main() {

// 1. Thread running a regular function

thread t1(regularFunction);

// 2. Thread running a lambda expression

thread t2([]() {

cout << "Hello from lambda expression!" << endl;

});

// 3. Thread running a function object

Functor functorObj;

thread t3(functorObj);

// 4a. Thread running a static member function

thread t4(&MyClass::staticMemberFunction);

// 4b. Thread running a non-static member function

MyClass obj;

thread t5(&MyClass::nonStaticMemberFunction, &obj);

// Wait for all threads to finish before exiting

t1.join();

t2.join();

t3.join();

t4.join();

t5.join();

cout << "Main thread finished." << endl;

return 0;

}

What happens here?

- t1 runs the regular function regularFunction.

- t2 runs an inline lambda that prints a message.

- t3 runs a function object (Functor) using its operator().

- t4 runs the static member function of MyClass.

- t5 runs the non-static member function of an object obj of MyClass.

All threads run concurrently, and the join() calls make the main thread wait for each one to complete before the program finishes.

Expected Output (order may vary due to threads running concurrently):

Hello from regular function!

Hello from lambda expression!

Hello from function object!

Hello from static member function!

Hello from non-static member function!

Main thread finished.

Thread Management in Multithreading C++

When working with threads in C++, the standard thread library provides many tools to control and coordinate threads effectively. These tools help you manage thread lifecycles, synchronize access to shared data, and optimize program performance. Let’s explore some important functions and classes used for thread management.

Key Thread Management Functions and Classes

| Function / Class | Purpose |

|---|---|

| join() | Makes the current (calling) thread wait until the target thread finishes its work. |

| detach() | Separates the running thread from its std::thread object, letting it run independently without anyone waiting for it. |

| mutex | A locking mechanism that ensures only one thread accesses shared data at a time, preventing conflicts. |

| lock_guard | A convenient wrapper around a mutex that locks it when created and automatically unlocks when destroyed (scope-based locking). |

| condition_variable | Used for making threads wait for certain conditions to be true before continuing execution. |

| atomic | Provides a way to safely read and modify shared variables between threads without explicit locks. |

| sleep_for() | Pauses the current thread for a specified duration, like waiting for 1 second. |

| sleep_until() | Pauses the current thread until a specific time point is reached. |

| hardware_concurrency() | Returns the number of threads the system can run in parallel (usually equals CPU cores or hardware threads). Helps in optimizing thread usage. |

| get_id() | Retrieves a unique identifier for the thread, useful for debugging or tracking thread activity. |

Detailed Explanation of Each Thread Management Tool

1. join()

When you create a thread, the main program and the new thread run at the same time. If you want the main program to wait for the thread to finish before continuing, you use join().

thread t(func);

t.join(); // Main thread waits until t finishes

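If a std::thread object is destroyed while it is still joinable (neither join() nor detach() has been called), the program calls std::terminate and aborts. Every thread must therefore be joined or detached before its thread object goes out of scope.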
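One common way to make sure join() is never forgotten, even when a function returns early or throws, is a small RAII wrapper. The sketch below is one possible version; JoinGuard and computeWithGuard are illustrative names, not standard library types. (C++20 offers std::jthread, which joins automatically in its destructor.)

```cpp
#include <thread>

// Illustrative RAII helper: joins the thread when the guard goes out of scope.
class JoinGuard {
public:
    explicit JoinGuard(std::thread& t) : thread_(t) {}
    ~JoinGuard() {
        if (thread_.joinable()) {
            thread_.join();
        }
    }
    JoinGuard(const JoinGuard&) = delete;
    JoinGuard& operator=(const JoinGuard&) = delete;

private:
    std::thread& thread_;
};

// Illustrative wrapper: the guard joins the worker before 'result' is read.
int computeWithGuard() {
    int result = 0;
    {
        std::thread worker([&result] { result = 42; });
        JoinGuard guard(worker);
        // Even if code here returned early or threw, the guard would join.
    }  // guard is destroyed first and joins 'worker'; then 'worker' is destroyed.
    return result;
}
```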

2. detach()

Sometimes, you want a thread to run on its own without the main program waiting for it. Calling detach() lets the thread run independently in the background.

thread t(func);

t.detach(); // Thread runs separately; main thread doesn't wait

Use this carefully because once detached, you can’t control or join that thread anymore.

3. mutex

When multiple threads access the same data, they might interfere with each other, causing errors (called data races). A mutex (short for mutual exclusion) prevents this by allowing only one thread to access the data at a time.

mutex mtx;

mtx.lock();

// Access shared data safely here

mtx.unlock();

4. lock_guard

Manually locking and unlocking mutexes can lead to mistakes, especially if your code has multiple return points or exceptions. lock_guard helps by automatically locking the mutex when it’s created and unlocking when it goes out of scope.

mutex mtx;

{

lock_guard<mutex> lock(mtx);

// Safe access to shared data within this block

} // Mutex automatically unlocked here

5. condition_variable

Sometimes, one thread needs to wait until another thread signals it to continue, like waiting for a resource or a specific event. condition_variable helps threads to sleep and wake up efficiently based on conditions.

Example usage involves waiting and notifying:

- wait(): the thread sleeps until it is notified.

- notify_one() or notify_all(): wake one or all waiting threads.

6. atomic

Using mutexes is safe but can sometimes slow down your program due to locking overhead. atomic variables allow threads to safely read and write shared data without locks by ensuring operations are indivisible (atomic).

#include <atomic>

atomic<int> counter(0);

counter++; // Safe increment from multiple threads

7. sleep_for() and sleep_until()

Sometimes, you may want a thread to pause for some time or until a specific clock time.

sleep_for(duration) pauses the thread for the given time.

this_thread::sleep_for(chrono::seconds(2)); // Sleep 2 seconds

sleep_until(time_point) pauses the thread until the given time.

auto wake_time = chrono::steady_clock::now() + chrono::seconds(5);

this_thread::sleep_until(wake_time); // Sleep until 5 seconds from now

8. hardware_concurrency()

This function returns a hint for how many threads your hardware can run in parallel. It may return 0 if the value cannot be determined, so treat it as an estimate. You can use it to decide how many threads to create for best performance without overloading the system.

unsigned int n = thread::hardware_concurrency();

cout << "Number of hardware threads available: " << n << endl;
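As a rough sketch of how that number can guide thread creation, the code below splits a vector into one chunk per hardware thread and sums the chunks concurrently. The helper names chooseWorkerCount and parallelSum, and the fallback of 2 workers when the count is unknown, are assumptions made for this example.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Illustrative helper: picks a worker count, falling back to 2 when
// hardware_concurrency() returns 0 (meaning the value is not known).
unsigned int chooseWorkerCount() {
    unsigned int n = std::thread::hardware_concurrency();
    return n == 0 ? 2 : n;
}

// Illustrative function: sums 'data' by giving each thread its own chunk.
long long parallelSum(const std::vector<int>& data) {
    const std::size_t workers = chooseWorkerCount();
    const std::size_t chunk = (data.size() + workers - 1) / workers;
    std::vector<long long> partial(workers, 0LL);  // one slot per thread
    std::vector<std::thread> threads;

    for (std::size_t w = 0; w < workers; ++w) {
        const std::size_t first = std::min(w * chunk, data.size());
        const std::size_t last = std::min(first + chunk, data.size());
        // Each thread writes only to its own slot, so no lock is needed.
        threads.emplace_back([&data, &partial, w, first, last] {
            partial[w] = std::accumulate(data.begin() + first,
                                         data.begin() + last, 0LL);
        });
    }
    for (std::thread& t : threads) {
        t.join();
    }
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

For small inputs like this the cost of starting threads outweighs any gain; the pattern only pays off when each chunk carries real work.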

9. get_id()

Each thread has a unique ID, which you can get by calling get_id(). This is useful when you want to log or debug to know which thread is doing what.

thread::id this_id = this_thread::get_id();

cout << "Current thread ID: " << this_id << endl;

C++ provides many thread management tools to help you:

- Coordinate thread execution (join, detach)

- Protect shared data (mutex, lock_guard, atomic)

- Synchronize thread behavior (condition_variable)

- Control timing (sleep_for, sleep_until)

- Get system info for better performance (hardware_concurrency)

- Identify threads (get_id)

Using these properly will make your multithreaded programs more reliable and efficient.

Problems with Multithreading in C++

Multithreading helps programs run faster by doing many things at once. But it also introduces some tricky problems that can cause your program to behave incorrectly or even crash. Understanding these problems is important for writing safe, reliable multithreaded code.

1. Deadlock

What is Deadlock?

Deadlock happens when two or more threads get stuck forever, each waiting for the other to release a resource (like a lock or mutex) they need. Because they wait on each other endlessly, none can continue, and the program freezes.

How Deadlock Happens — Example

Imagine two threads, Thread A and Thread B:

- Thread A locks Mutex 1 and waits to lock Mutex 2.

- Thread B locks Mutex 2 and waits to lock Mutex 1.

Both threads hold one mutex and wait for the other forever — this is a deadlock.

Visualization:

| Thread | Holds | Waiting For |

|---|---|---|

| A | Mutex 1 | Mutex 2 |

| B | Mutex 2 | Mutex 1 |

How to Avoid Deadlock?

- Always lock mutexes in the same order across all threads.

- Use std::lock, which can lock multiple mutexes without deadlock.

- Keep critical sections short and release locks quickly.

- Avoid nested locks if possible.
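The std::lock advice can be sketched with the classic two-account transfer, where two threads name the same pair of mutexes in opposite order. Account, transfer, and runOpposingTransfers are hypothetical names for this illustration, and the sketch assumes the two accounts passed to transfer are different objects.

```cpp
#include <mutex>
#include <thread>

// Illustrative type: an account protected by its own mutex.
struct Account {
    std::mutex m;
    int balance;
    explicit Account(int initial) : balance(initial) {}
};

// Locks both accounts with std::lock, which avoids deadlock regardless of
// the order in which the two mutexes are named.
void transfer(Account& from, Account& to, int amount) {
    std::lock(from.m, to.m);
    // adopt_lock: the guards take over mutexes that are already locked.
    std::lock_guard<std::mutex> lockFrom(from.m, std::adopt_lock);
    std::lock_guard<std::mutex> lockTo(to.m, std::adopt_lock);
    from.balance -= amount;
    to.balance += amount;
}

// Illustrative driver: two threads transfer in opposite directions.
int runOpposingTransfers() {
    Account a(100);
    Account b(100);
    std::thread t1([&] { for (int i = 0; i < 1000; ++i) transfer(a, b, 1); });
    std::thread t2([&] { for (int i = 0; i < 1000; ++i) transfer(b, a, 1); });
    t1.join();
    t2.join();
    return a.balance + b.balance;  // money is conserved
}
```

If each thread instead locked from.m and then to.m by hand, the opposite ordering in t1 and t2 could produce exactly the deadlock shown in the table above. In C++17 and later, std::scoped_lock combines the lock and the guards into a single declaration.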

2. Race Condition

What is a Race Condition?

A race condition happens when two or more threads access the same shared data at the same time, and at least one thread modifies it without proper synchronization. The result depends on the exact timing of threads, which can change every run.

Why is it a Problem?

The data can become corrupted or inconsistent because the operations overlap unpredictably. This leads to bugs that are hard to reproduce and fix.

Example of Race Condition:

#include <iostream>

#include <thread>

int counter = 0;

void increment() {

for (int i = 0; i < 1000; i++) {

counter++; // Not thread-safe

}

}

int main() {

std::thread t1(increment);

std::thread t2(increment);

t1.join();

t2.join();

std::cout << counter << std::endl; // Might be less than 2000 due to race condition

}

Here, both threads try to update counter at the same time. Since counter++ is not atomic, some increments get lost.

How to Fix Race Condition?

- Use mutexes (std::mutex) to protect shared data.

- Use atomic operations (std::atomic) for simple variables.

- Design thread-safe data structures.

3. Starvation

What is Starvation?

Starvation happens when a thread waits indefinitely to get access to a resource because other threads keep getting priority or resources first.

Why Does it Happen?

If your synchronization mechanism favors some threads over others (e.g., high priority threads always run first), some threads may never get a chance to run or access needed resources.

Example Scenario:

- Several threads with high priority continuously lock a mutex.

- A low priority thread waits forever because it keeps getting preempted.

How to Avoid Starvation?

- Use fair locking algorithms like fair mutexes.

- Use condition variables to signal waiting threads.

- Avoid priority inversion by carefully managing thread priorities.

4. Thread Synchronization — The Solution

To solve or minimize these problems, thread synchronization is crucial.

What is Thread Synchronization?

It is a technique to control access to shared resources so that only one thread can use them at a time, preventing conflicts and corruption.

Common Synchronization Tools in C++:

- Mutex (std::mutex)

  - Provides exclusive locking.

  - Only one thread can lock it at a time.

  - Other threads wait until the mutex is unlocked.

- Lock Guards (std::lock_guard)

  - A convenient RAII wrapper that locks a mutex when created and unlocks when destroyed.

  - Helps prevent forgetting to unlock.

std::mutex mtx;

void safe_increment() {

std::lock_guard<std::mutex> lock(mtx);

counter++;

}

- Unique Lock (std::unique_lock)

  - More flexible than lock_guard, supports manual locking/unlocking and deferred locking.

  - Works well with condition variables.

- Condition Variables (std::condition_variable)

  - Allow threads to wait for some condition to become true.

  - Useful for producer-consumer problems and signaling between threads.
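As a sketch of how these tools fit together, here is a small producer-consumer exchange: one thread pushes numbers into a shared queue while another pops and adds them up. The function name produceAndConsume and the done flag are choices made for this example, not a fixed pattern from the standard library.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>

// Illustrative function: a producer pushes 1..count into a shared queue and a
// consumer adds up everything it pops. Returns the consumer's total.
int produceAndConsume(int count) {
    std::queue<int> items;
    std::mutex m;
    std::condition_variable cv;
    bool done = false;
    int total = 0;

    std::thread consumer([&] {
        std::unique_lock<std::mutex> lock(m);
        for (;;) {
            // wait() releases the lock while sleeping and re-acquires it
            // before returning; the predicate guards against spurious wakeups.
            cv.wait(lock, [&] { return !items.empty() || done; });
            while (!items.empty()) {
                total += items.front();
                items.pop();
            }
            if (done) {
                break;
            }
        }
    });

    std::thread producer([&] {
        for (int i = 1; i <= count; ++i) {
            {
                std::lock_guard<std::mutex> lock(m);
                items.push(i);
            }
            cv.notify_one();
        }
        {
            std::lock_guard<std::mutex> lock(m);
            done = true;  // set under the lock so the consumer cannot miss it
        }
        cv.notify_one();
    });

    producer.join();
    consumer.join();
    return total;
}
```

The consumer uses std::unique_lock because wait() has to unlock and relock the mutex, which std::lock_guard does not allow; the producer only needs short scoped locks, so std::lock_guard is enough there.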

Problems & Solutions of Multithreading

| Problem | Cause | Result | Solution |

|---|---|---|---|

| Deadlock | Circular waiting for locked resources | Program freezes/stalls | Lock mutexes in order, use std::lock |

| Race Condition | Unsynchronized access/modification of shared data | Data corruption or incorrect results | Use mutexes or atomic variables |

| Starvation | Some threads get priority over others | Some threads never run | Use fair locks, manage thread priorities |

Tips for Beginners

- Always protect shared data with mutexes or atomics.

- Keep locks held for the shortest time possible.

- Avoid complex locking schemes that can cause deadlocks.

- Use tools like thread sanitizers (e.g., in clang/gcc) to detect race conditions.

- Write simple multithreaded code first and gradually add complexity.

What is a Context Switch in Multithreading?

A context switch is the process by which the CPU switches from executing one thread to executing another thread. Since the CPU can only run one thread at a time on a single core, it rapidly switches between multiple threads to give the illusion of parallelism.

Why is Context Switching Needed in Multithreading?

- To allow multiple threads to share the CPU fairly.

- To handle multiple tasks efficiently, especially when some threads are waiting (e.g., for input/output).

- To improve overall system responsiveness.

What Happens During a Context Switch in Multithreading?

When the CPU decides to switch from the currently running thread (let’s call it Thread A) to another thread (Thread B), it needs to:

- Save the State of Thread A: This includes the thread’s CPU registers, program counter (the address of the next instruction to execute), stack pointer, and other critical information that defines exactly where Thread A was in its execution.

- Load the State of Thread B: Restore the saved CPU registers, program counter, stack pointer, etc., of Thread B so it can continue from where it left off.

- Resume Execution of Thread B: The CPU then starts executing instructions of Thread B.

What is Stored in the Context in Multithreading?

- CPU registers (general purpose registers).

- Program counter (instruction pointer).

- Stack pointer (to track function calls).

- Possibly other hardware-specific information.

Overhead of Context Switching in Multithreading

- Context switching is not free — it takes time and CPU cycles.

- Frequent context switches can reduce overall performance due to this overhead.

- Operating systems and runtime schedulers try to minimize unnecessary context switches.

Summary of Context Switching in Multithreading

| Term | Meaning |

|---|---|

| Context | The saved state of a thread (registers, PC, stack pointer, etc.) |

| Context Switch | Saving the current thread’s context and loading another thread’s context to resume its execution |

The following complete programs demonstrate each of the thread management tools described earlier.

1. join() Example

#include <iostream>

#include <thread>

using namespace std;

void task() {

cout << "Thread is running..." << endl;

}

int main() {

thread t(task);

t.join(); // Wait for thread to finish

cout << "Main thread finished after join." << endl;

return 0;

}

2. detach() Example

#include <iostream>

#include <thread>

#include <chrono>

using namespace std;

void task() {

this_thread::sleep_for(chrono::seconds(2));

cout << "Detached thread finished work." << endl;

}

int main() {

thread t(task);

t.detach(); // Thread runs independently

cout << "Main thread continues without waiting." << endl;

this_thread::sleep_for(chrono::seconds(3)); // Wait to see detached thread output

return 0;

}

3. mutex and lock_guard Example

#include <iostream>

#include <thread>

#include <mutex>

using namespace std;

mutex mtx;

int counter = 0;

void increment() {

for (int i = 0; i < 1000; ++i) {

lock_guard<mutex> lock(mtx); // Lock the mutex; it is released at the end of each iteration

++counter;

}

}

int main() {

thread t1(increment);

thread t2(increment);

t1.join();

t2.join();

cout << "Counter value: " << counter << endl; // Should be 2000

return 0;

}

4. condition_variable Example

#include <iostream>

#include <thread>

#include <mutex>

#include <condition_variable>

#include <chrono>

using namespace std;

mutex mtx;

condition_variable cv;

bool ready = false;

void waitForWork() {

unique_lock<mutex> lock(mtx);

cv.wait(lock, [] { return ready; }); // Wait until ready == true

cout << "Worker thread started after notification." << endl;

}

void setReady() {

{

lock_guard<mutex> lock(mtx);

ready = true;

}

cv.notify_one(); // Notify waiting thread

}

int main() {

thread worker(waitForWork);

this_thread::sleep_for(chrono::seconds(1));

setReady();

worker.join();

return 0;

}

5. atomic Example

#include <iostream>

#include <thread>

#include <atomic>

using namespace std;

atomic<int> counter(0);

void increment() {

for (int i = 0; i < 1000; ++i) {

++counter; // Safe without mutex

}

}

int main() {

thread t1(increment);

thread t2(increment);

t1.join();

t2.join();

cout << "Atomic counter value: " << counter << endl; // Should be 2000

return 0;

}

6. sleep_for() and sleep_until() Example

#include <iostream>

#include <thread>

#include <chrono>

using namespace std;

int main() {

cout << "Sleeping for 2 seconds..." << endl;

this_thread::sleep_for(chrono::seconds(2));

auto wakeTime = chrono::steady_clock::now() + chrono::seconds(3);

cout << "Sleeping until 3 seconds from now..." << endl;

this_thread::sleep_until(wakeTime);

cout << "Awake now!" << endl;

return 0;

}

7. hardware_concurrency() Example

#include <iostream>

#include <thread>

using namespace std;

int main() {

unsigned int n = thread::hardware_concurrency();

cout << "This system can run " << n << " threads concurrently." << endl;

return 0;

}

8. get_id() Example

#include <iostream>

#include <thread>

using namespace std;

void printThreadId() {

cout << "Thread ID: " << this_thread::get_id() << endl;

}

int main() {

thread t(printThreadId);

t.join();

cout << "Main thread ID: " << this_thread::get_id() << endl;

return 0;

}


Special thanks to @mr-raj for contributing to this article on Embedded Prep

Mr. Raj Kumar is a highly experienced Technical Content Engineer with 7 years of dedicated expertise in the intricate field of embedded systems. At Embedded Prep, Raj is at the forefront of creating and curating high-quality technical content designed to educate and empower aspiring and seasoned professionals in the embedded domain.

Throughout his career, Raj has honed a unique skill set that bridges the gap between deep technical understanding and effective communication. His work encompasses a wide range of educational materials, including in-depth tutorials, practical guides, course modules, and insightful articles focused on embedded hardware and software solutions. He possesses a strong grasp of embedded architectures, microcontrollers, real-time operating systems (RTOS), firmware development, and various communication protocols relevant to the embedded industry.

Raj is adept at collaborating closely with subject matter experts, engineers, and instructional designers to ensure the accuracy, completeness, and pedagogical effectiveness of the content. His meticulous attention to detail and commitment to clarity are instrumental in transforming complex embedded concepts into easily digestible and engaging learning experiences. At Embedded Prep, he plays a crucial role in building a robust knowledge base that helps learners master the complexities of embedded technologies.
